Spring Boot Autoscaling on Kubernetes

Autoscaling based on custom metrics is one of the features that may convince you to run your Spring Boot application on Kubernetes. By default, horizontal pod autoscaling can scale a deployment based on CPU and memory usage. However, such an approach is not enough for more advanced scenarios. In that case, you can use Prometheus to collect metrics from your applications and then integrate Prometheus with the Kubernetes custom metrics mechanism.

Preface

In this article, you will learn how to run Prometheus on Kubernetes using the Helm package manager. You will use a chart that configures Prometheus to scrape a variety of Kubernetes resource types, so you won’t have to set that up yourself. In the next step, you will install the Prometheus Adapter, also with Helm. The adapter acts as a bridge between the Prometheus instance and the custom metrics API. Our Spring Boot application exposes metrics through an HTTP endpoint. You will learn how to configure autoscaling on Kubernetes based on the number of incoming requests.

Source code

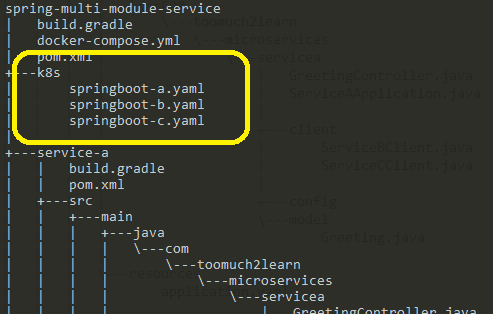

If you would like to try it yourself, you can take a look at my source code. To do that, clone my repository sample-spring-boot-on-kubernetes and go to the k8s directory. There you can find all the Kubernetes manifests and configuration files required for this exercise.

Our Spring Boot application is ready to be deployed on Kubernetes with Skaffold. You can find the skaffold.yaml file in the project root directory. Skaffold uses Jib Maven Plugin for building a Docker image. It deploys not only the Spring Boot application but also the Mongo database.

apiVersion: skaffold/v2beta5
kind: Config
metadata:
  name: sample-spring-boot-on-kubernetes
build:
  artifacts:
    - image: piomin/sample-spring-boot-on-kubernetes
      jib:
        args:
          - -Pjib
  tagPolicy:
    gitCommit: {}
deploy:
  kubectl:
    manifests:
      - k8s/mongodb-deployment.yaml
      - k8s/deployment.yaml

The only thing you need to do to build and deploy the application is to execute the following command. It also allows you to access HTTP API through the local port.

$ skaffold dev --port-forward

For more information about Skaffold, Jib, and local development of Java applications, you may refer to the article Local Java Development on Kubernetes.

Kubernetes Autoscaling with Spring Boot – Architecture

The picture below shows the architecture of our sample system. The horizontal pod autoscaler (HPA) automatically scales the number of pods based on CPU, memory, or other custom metrics. It obtains the value of the metric by polling the Custom Metrics API. In the beginning, we are running a single instance of our Spring Boot application on Kubernetes. Prometheus gathers and stores metrics from the application by calling the HTTP endpoint /actuator/prometheus. Consequently, the HPA scales up the number of pods if the value of the metric exceeds the assumed value.

Run Prometheus on Kubernetes

Let’s start by running the Prometheus instance on Kubernetes. In order to do that, you should use the official Prometheus Helm chart. Firstly, you need to add the required repository.

$ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

$ helm repo add stable https://charts.helm.sh/stable

$ helm repo update

Then, you should execute the Helm install command and provide the name of your installation.

$ helm install prometheus prometheus-community/prometheus

In a moment, the Prometheus instance is ready to use. You can access it through the Kubernetes Service prometheus-server. It is available on port 80. By default, the type of the Service is ClusterIP. Therefore, you should execute the kubectl port-forward command to access it on a local port.

Deploy Spring Boot on Kubernetes

In order to enable Prometheus support in Spring Boot, you need to include Spring Boot Actuator and the Micrometer Prometheus library. The full list of required dependencies also contains the Spring Web and Spring Data MongoDB modules.

<dependency>
  <groupId>org.springframework.boot</groupId>
  <artifactId>spring-boot-starter-web</artifactId>
</dependency>
<dependency>
  <groupId>org.springframework.boot</groupId>
  <artifactId>spring-boot-starter-actuator</artifactId>
</dependency>
<dependency>
  <groupId>io.micrometer</groupId>
  <artifactId>micrometer-registry-prometheus</artifactId>
</dependency>
<dependency>
  <groupId>org.springframework.boot</groupId>
  <artifactId>spring-boot-devtools</artifactId>
</dependency>
<dependency>
  <groupId>org.springframework.boot</groupId>
  <artifactId>spring-boot-starter-data-mongodb</artifactId>
</dependency>

After enabling Prometheus support, the application exposes metrics through the /actuator/prometheus endpoint.

Our example application is very simple. It exposes the REST API for CRUD operations and connects to the Mongo database. Just to clarify, here’s the REST controller implementation.

@RestController
@RequestMapping("/persons")
public class PersonController {

    private PersonRepository repository;
    private PersonService service;

    PersonController(PersonRepository repository, PersonService service) {
        this.repository = repository;
        this.service = service;
    }

    @PostMapping
    public Person add(@RequestBody Person person) {
        return repository.save(person);
    }

    @PutMapping
    public Person update(@RequestBody Person person) {
        return repository.save(person);
    }

    @DeleteMapping("/{id}")
    public void delete(@PathVariable("id") String id) {
        repository.deleteById(id);
    }

    @GetMapping
    public Iterable<Person> findAll() {
        return repository.findAll();
    }

    @GetMapping("/{id}")
    public Optional<Person> findById(@PathVariable("id") String id) {
        return repository.findById(id);
    }

}

You can add a new person, then modify or delete it through the HTTP API. You can also find a person by id or list all available persons. Spring Boot Actuator generates HTTP traffic statistics for each endpoint and exposes them in a format readable by Prometheus. The number of incoming requests is available in the http_server_requests_seconds_count metric. Consequently, we will use this metric for Spring Boot autoscaling on Kubernetes.
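To make the shape of that metric more concrete, here is a minimal sketch that sums the http_server_requests_seconds_count samples found in a scrape response. The sample lines in main are illustrative assumptions (the exact labels depend on your Micrometer version), and the class name RequestCountSketch is hypothetical, not part of the sample repository.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class RequestCountSketch {

    // Matches exposition lines of the form: http_server_requests_seconds_count{labels} value
    private static final Pattern COUNT_LINE =
            Pattern.compile("^http_server_requests_seconds_count\\{(.*)\\}\\s+(\\S+)$");

    // Sums the values of all matching count samples in a scrape body.
    static double totalRequests(String scrapeBody) {
        double total = 0.0;
        for (String line : scrapeBody.split("\n")) {
            Matcher m = COUNT_LINE.matcher(line.trim());
            if (m.matches()) {
                total += Double.parseDouble(m.group(2));
            }
        }
        return total;
    }

    public static void main(String[] args) {
        // Assumed example of what /actuator/prometheus might return (labels shortened).
        String body = String.join("\n",
                "# TYPE http_server_requests_seconds summary",
                "http_server_requests_seconds_count{method=\"GET\",status=\"200\",uri=\"/persons\",} 5.0",
                "http_server_requests_seconds_sum{method=\"GET\",status=\"200\",uri=\"/persons\",} 0.12",
                "http_server_requests_seconds_count{method=\"POST\",status=\"200\",uri=\"/persons\",} 3.0",
                "http_server_requests_seconds_count{method=\"GET\",status=\"200\",uri=\"/persons/{id}\",} 2.0");
        System.out.println(totalRequests(body)); // prints 10.0
    }
}
```

Each combination of method, status, and URI produces its own series, which is why a single total has to be computed by summing them.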

Prometheus collects a pretty large set of metrics from the whole cluster. However, by default, it does not gather metrics from your applications. To make Prometheus scrape particular pods, you must add annotations to the Deployment as shown below. The annotation prometheus.io/path indicates the context path of the metrics endpoint. Of course, you have to enable scraping for the application using the annotation prometheus.io/scrape. Finally, you need to set the HTTP port number with prometheus.io/port.
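As a rough illustration of how these three annotations combine into a scrape target, consider the sketch below. It is a simplification under stated assumptions: the real work is done by relabeling rules in the Prometheus chart configuration, the pod IP 10.0.0.12 is made up, and the class ScrapeTargetSketch is hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class ScrapeTargetSketch {

    // Returns the URL Prometheus would scrape, or empty if scraping is not enabled.
    static Optional<String> scrapeUrl(String podIp, Map<String, String> annotations) {
        if (!"true".equals(annotations.get("prometheus.io/scrape"))) {
            return Optional.empty();
        }
        String port = annotations.get("prometheus.io/port");
        // Prometheus falls back to /metrics when no path is configured.
        String path = annotations.getOrDefault("prometheus.io/path", "/metrics");
        // Simplification: without a port annotation this sketch uses the bare pod IP.
        String host = (port == null) ? podIp : podIp + ":" + port;
        return Optional.of("http://" + host + path);
    }

    public static void main(String[] args) {
        Map<String, String> annotations = new HashMap<>();
        annotations.put("prometheus.io/scrape", "true");
        annotations.put("prometheus.io/path", "/actuator/prometheus");
        annotations.put("prometheus.io/port", "8080");
        // Hypothetical pod IP, used only for illustration.
        System.out.println(scrapeUrl("10.0.0.12", annotations).orElse("not scraped"));
        // prints http://10.0.0.12:8080/actuator/prometheus
    }
}
```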

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-spring-boot-on-kubernetes-deployment
spec:
  selector:
    matchLabels:
      app: sample-spring-boot-on-kubernetes
  template:
    metadata:
      annotations:
        prometheus.io/path: /actuator/prometheus
        prometheus.io/scrape: "true"
        prometheus.io/port: "8080"
      labels:
        app: sample-spring-boot-on-kubernetes
    spec:
      containers:
        - name: sample-spring-boot-on-kubernetes
          image: piomin/sample-spring-boot-on-kubernetes
          ports:
            - containerPort: 8080
          env:
            - name: MONGO_DATABASE
              valueFrom:
                configMapKeyRef:
                  name: mongodb
                  key: database-name
            - name: MONGO_USERNAME
              valueFrom:
                secretKeyRef:
                  name: mongodb
                  key: database-user
            - name: MONGO_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mongodb
                  key: database-password

Install Prometheus Adapter on Kubernetes

The Prometheus adapter pulls metrics from the Prometheus instance and exposes them through the Custom Metrics API. In this step, you will have to provide configuration for pulling a custom metric exposed by the Spring Boot Actuator. The http_server_requests_seconds_count metric contains the number of requests received by a particular HTTP endpoint. To clarify, let’s take a look at the list of http_server_requests_seconds_count metrics for the multiple /persons endpoints.

You need to override some configuration settings for the Prometheus adapter. Firstly, you should change the default address of the Prometheus instance. Since the name of the Prometheus Service is prometheus-server, you should change it to prometheus-server.default.svc. The HTTP port number is 80. Then, you have to define a custom rule for pulling the required metric from Prometheus. It is important to map the labels that Prometheus uses for the pod name and namespace (kubernetes_pod_name and kubernetes_namespace) to the corresponding Kubernetes resources. There are multiple entries for http_server_requests_seconds_count, one per endpoint, so you must calculate their sum. The name of the custom Kubernetes metric is http_server_requests_seconds_count_sum.
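The aggregation performed by such a rule can be sketched in plain Java: keep only the series whose uri label matches /persons.* and add them up per pod. This is only an in-memory analogy of the PromQL sum ... by (pod) expression, and the Sample class, pod names, and values are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Pattern;

public class AdapterQuerySketch {

    // One Prometheus series value, reduced to the labels that matter here.
    static final class Sample {
        final String pod;
        final String uri;
        final double value;

        Sample(String pod, String uri, double value) {
            this.pod = pod;
            this.uri = uri;
            this.value = value;
        }
    }

    // Sums sample values per pod, keeping only URIs that fully match the regex
    // (PromQL regex matchers are anchored, hence matches() rather than find()).
    static Map<String, Double> sumByPod(List<Sample> samples, String uriRegex) {
        Pattern pattern = Pattern.compile(uriRegex);
        Map<String, Double> totals = new TreeMap<>();
        for (Sample s : samples) {
            if (pattern.matcher(s.uri).matches()) {
                totals.merge(s.pod, s.value, Double::sum);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        List<Sample> samples = Arrays.asList(
                new Sample("pod-a", "/persons", 5.0),
                new Sample("pod-a", "/persons/{id}", 3.0),
                new Sample("pod-a", "/actuator/prometheus", 40.0), // filtered out
                new Sample("pod-b", "/persons", 2.0));
        System.out.println(sumByPod(samples, "/persons.*")); // prints {pod-a=8.0, pod-b=2.0}
    }
}
```

Filtering on the URI keeps the Prometheus scrape requests themselves from inflating the value that drives autoscaling.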

prometheus:
  url: http://prometheus-server.default.svc
  port: 80
  path: ""

rules:
  default: true
  custom:
    - seriesQuery: '{__name__=~"^http_server_requests_seconds_.*"}'
      resources:
        overrides:
          kubernetes_namespace:
            resource: namespace
          kubernetes_pod_name:
            resource: pod
      name:
        matches: "^http_server_requests_seconds_count(.*)"
        as: "http_server_requests_seconds_count_sum"
      metricsQuery: sum(<<.Series>>{<<.LabelMatchers>>,uri=~"/persons.*"}) by (<<.GroupBy>>)

Now, you just need to execute the Helm install command with the location of the YAML configuration file.

$ helm install -f k8s/helm-config.yaml prometheus-adapter prometheus-community/prometheus-adapter

Finally, you can verify that the metrics have been successfully pulled by querying the Custom Metrics API, for example with kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1".

Create Kubernetes Horizontal Pod Autoscaler

In the last step of this tutorial, you will create a Kubernetes HorizontalPodAutoscaler. It automatically scales up the number of pods when the average value of the http_server_requests_seconds_count_sum metric exceeds 100. Note that this metric is a cumulative counter, so its value only grows as requests arrive. In other words, once your single application instance has received more than 100 requests, the HPA starts a new instance. After another 100 requests, the average value of the metric exceeds 100 once again, so the HPA runs a third instance. Consequently, after sending 1,000 requests you should have 10 pods. The definition of our HorizontalPodAutoscaler is shown below.
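The scaling decision follows the formula from the Kubernetes documentation: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below applies it with the default 10% tolerance and the min/max bounds. It is a simplified model that ignores stabilization windows and pod readiness, and the class name HpaReplicaSketch is hypothetical.

```java
public class HpaReplicaSketch {

    // Default HPA tolerance: no scaling while the ratio stays within 10% of 1.0.
    static final double TOLERANCE = 0.1;

    // Computes the replica count the HPA would aim for, given the current
    // per-pod average of the metric and the configured target average.
    static int desiredReplicas(int currentReplicas, double currentAverage,
                               double targetAverage, int minReplicas, int maxReplicas) {
        double ratio = currentAverage / targetAverage;
        if (Math.abs(ratio - 1.0) <= TOLERANCE) {
            return currentReplicas;
        }
        int desired = (int) Math.ceil(currentReplicas * ratio);
        // Clamp to the minReplicas/maxReplicas bounds of the HPA spec.
        return Math.max(minReplicas, Math.min(maxReplicas, desired));
    }

    public static void main(String[] args) {
        System.out.println(desiredReplicas(1, 150.0, 100.0, 1, 10)); // prints 2
        System.out.println(desiredReplicas(2, 250.0, 100.0, 1, 10)); // prints 5
        System.out.println(desiredReplicas(5, 400.0, 100.0, 1, 10)); // prints 10 (capped)
        System.out.println(desiredReplicas(1, 105.0, 100.0, 1, 10)); // prints 1 (within tolerance)
    }
}
```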

apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: sample-hpa
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-spring-boot-on-kubernetes-deployment
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Pods
      pods:
        metric:
          name: http_server_requests_seconds_count_sum
        target:
          type: AverageValue
          averageValue: 100

Testing Kubernetes autoscaling with Spring Boot

After deploying all the required components, you can verify the list of running pods. As you can see, there is a single instance of our Spring Boot application sample-spring-boot-on-kubernetes.

To check the current value of the http_server_requests_seconds_count_sum metric, you can display the details of the HorizontalPodAutoscaler. As you can see, I have already sent 15 requests to the different HTTP endpoints.

Here’s the sequence of requests you may send to the application to test autoscaling behavior.

$ curl http://localhost:8080/persons -d "{\"firstName\":\"Test\",\"lastName\":\"Test\",\"age\":20,\"gender\":\"MALE\"}" -H "Content-Type: application/json"

{"id":"5fa334d149685f24841605a9","firstName":"Test","lastName":"Test","age":20,"gender":"MALE"}

$ curl http://localhost:8080/persons/5fa334d149685f24841605a9

{"id":"5fa334d149685f24841605a9","firstName":"Test","lastName":"Test","age":20,"gender":"MALE"}

$ curl http://localhost:8080/persons

[{"id":"5fa334d149685f24841605a9","firstName":"Test","lastName":"Test","age":20,"gender":"MALE"}]

$ curl -X DELETE http://localhost:8080/persons/5fa334d149685f24841605a9

After sending many HTTP requests to our application, you can verify the number of running pods. In this case, we have 5 instances.

You can also display a list of running pods.

Conclusion

In this article, I showed you a simple scenario of Kubernetes autoscaling with Spring Boot based on the number of incoming requests. You can easily create more advanced scenarios just by modifying the metric query in the Prometheus Adapter configuration file. I ran all my tests on Google Cloud with GKE. For more information about running JVM applications on GKE, please refer to Running Kotlin Microservice on Google Kubernetes Engine.